Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness. Face swapping is one common way of creating them. Deepfakes can be used for a variety of purposes, including entertainment, education, and research. However, they can also be used maliciously, and that is why it is important to be able to tell real content from deepfake content.

Deepfakes can be used to spread misinformation and propaganda. For example, a deepfake could show a politician saying something they never actually said, which could damage the politician’s reputation or influence the outcome of an election. Deepfakes can also be used to bully or harass people. For example, a deepfake could show someone saying or doing something embarrassing in order to humiliate them or damage their reputation.

Find out if you’re watching AI with Intel’s newest tech.

FakeCatcher is on the case

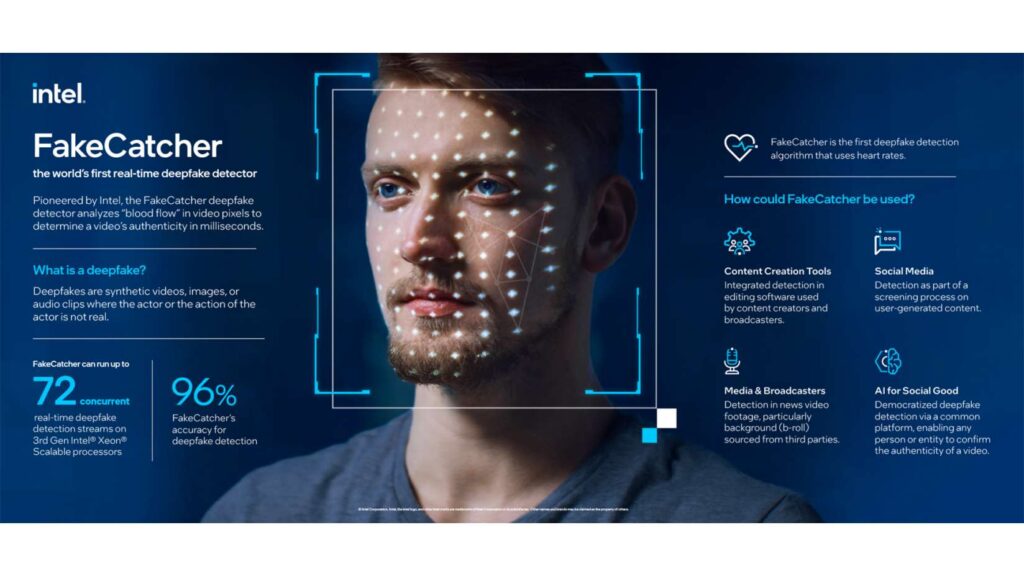

As part of Intel’s Responsible AI work, the company has productized FakeCatcher, a technology that can detect fake videos with a 96% accuracy rate. Intel describes its deepfake detection platform as the world’s first real-time deepfake detector, returning results in milliseconds.

How FakeCatcher Works

Intel’s real-time platform uses FakeCatcher, a detector designed by Ilke Demir of Intel Labs in collaboration with Umur Ciftci from the State University of New York at Binghamton. Using Intel hardware and software, it runs on a server and interfaces through a web-based platform. On the software side, an orchestra of specialist tools forms the optimized FakeCatcher architecture. Teams used OpenVINO™ to run AI models for face and landmark detection algorithms. Computer vision blocks were optimized with Intel® Integrated Performance Primitives (a multi-threaded software library) and OpenCV (a toolkit for processing real-time images and videos), while inference blocks were optimized with Intel® Deep Learning Boost and with Intel® Advanced Vector Extensions 512, and media blocks were optimized with Intel® Advanced Vector Extensions 2. Teams also leaned on the Open Visual Cloud project to provide an integrated software stack for the Intel® Xeon® Scalable processor family. On the hardware side, the real-time detection platform can run up to 72 different detection streams simultaneously on 3rd Gen Intel® Xeon® Scalable processors.

Most deep learning-based detectors look at raw data to try to find signs of inauthenticity and identify what is wrong with a video. In contrast, FakeCatcher looks for authentic clues in real videos by assessing what makes us human: subtle “blood flow” signals in the pixels of a video. When our hearts pump blood, our veins change color. These blood flow signals are collected from all over the face, and algorithms translate them into spatiotemporal maps. A deep learning model then uses those maps to classify a video as real or fake almost instantly.
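To make the idea of a spatiotemporal map more concrete, here is a minimal Python sketch. It is not Intel’s implementation. It assumes the face has already been cropped in every frame, splits the crop into a simple grid of regions (a hypothetical stand-in for landmark-based regions), and uses the average green-channel intensity of each region as a rough proxy for the blood flow signal. The function names `build_ppg_map` and `dominant_frequency` are made up for this illustration.

```python
import numpy as np


def build_ppg_map(frames, grid=(4, 4)):
    """Turn face crops into a (regions x time) map of color signals.

    frames: array of shape (T, H, W, 3), RGB face crops (assumed input).
    grid:   rows and columns of face regions (an illustrative choice).
    """
    frames = np.asarray(frames, dtype=np.float64)
    t, h, w, _ = frames.shape
    rows, cols = grid

    # Green channel only: a common proxy for subtle skin color changes.
    green = frames[..., 1]

    # Trim so the crop divides evenly into the grid.
    green = green[:, : h - h % rows, : w - w % cols]

    # Average the pixels inside each grid cell, frame by frame.
    blocks = green.reshape(
        t, rows, green.shape[1] // rows, cols, green.shape[2] // cols
    )
    means = blocks.mean(axis=(2, 4))            # shape (T, rows, cols)
    signals = means.reshape(t, rows * cols).T   # shape (regions, T)

    # Normalize each region's signal to zero mean and unit variance.
    signals = signals - signals.mean(axis=1, keepdims=True)
    std = signals.std(axis=1, keepdims=True)
    return signals / np.where(std > 0, std, 1.0)


def dominant_frequency(signal, fps):
    """Return the strongest non-zero frequency (in Hz) of a 1-D signal."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fps)
    return freqs[1:][np.argmax(spectrum[1:])]


# Synthetic 10-second clip at 30 fps with a 1.2 Hz (72 bpm) "pulse".
fps, seconds, pulse_hz = 30, 10, 1.2
time_axis = np.arange(fps * seconds) / fps
clip = np.full((fps * seconds, 32, 32, 3), 128.0)
clip[..., 1] += np.sin(2 * np.pi * pulse_hz * time_axis)[:, None, None]

ppg_map = build_ppg_map(clip)
print(ppg_map.shape, dominant_frequency(ppg_map[0], fps))
```

In a real detector, a map like this would be fed to a trained classifier. The sketch stops at the map itself because the model and its training data are outside what this article describes.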

Learn More

There are several potential use cases for FakeCatcher. Social media platforms could leverage the technology to prevent users from uploading harmful deepfake videos. Global news organizations could use the detector to avoid inadvertently amplifying manipulated videos. And nonprofit organizations could employ the platform to democratize detection of deepfakes for everyone. If you would like to learn more about FakeCatcher, check it out on Intel’s site. If you would like to learn more about AI TV, click here.